On Robots, SEO, and Automation

One of the great joys of running a little digital café, aside from serving piping-hot content, is watching new customers (search engines) stroll in. But as traffic picks up, it’s time to ensure everyone knows the house rules, where everything is, and how best to move around. That’s where the robots.txt, sitemap, and a touch of security come in—like a friendly (but firm) host. In my quest for better SEO, I rolled up my sleeves, brewed some fresh code, and made my café more welcoming (and discoverable) than ever.

Serving Up the Robots.txt

You know how a café sometimes has a friendly sign on the door that lays out the rules? (No outside food, keep your pets leashed, watch your step, etc.) That’s essentially your robots.txt file.

What Is It?

It’s a simple text file that tells search engines and other bots which parts of your site to explore, and which to leave alone.

Why It Matters:

If you have sections or large swaths of content that don’t need to be crawled, the robots.txt keeps the chaos in check. In café terms, it’s like telling your staff where the “Employees Only” areas are. Keep in mind that it’s a polite sign, not a lock: well-behaved crawlers follow it, but it won’t keep anyone out of truly private content.

Installing the robots.txt was straightforward. I created the file, placed it at the root of my website, and voila—my robots (search engines, that is) now have clear instructions on where to go (and where not to go).
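To make the door sign concrete, here is a minimal sketch of what such a file might contain, checked with Python’s standard-library robots.txt parser. The blocked paths and the example.com domain are placeholders I made up for illustration, not my café’s real rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules and domain: swap in your own paths and URL.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /drafts/
Allow: /

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Public pages are open to every bot, the back room is not.
print(parser.can_fetch("*", "https://example.com/blog/seo"))     # True
print(parser.can_fetch("*", "https://example.com/admin/login"))  # False

# Sitemap lines are exposed too (Python 3.8+).
print(parser.site_maps())
```

Running a check like this before deploying is a cheap way to catch a stray Disallow line that would accidentally lock the front door.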

Sitemaps and Menus

Once the door sign (robots.txt) was up, I needed a way to guide my digital visitors around the café. That’s where sitemaps shine. Imagine a café menu: it lists every dish, where to find it, and sometimes a bit of detail.

Generating the Sitemap:

I built a script that automatically creates a sitemap every time my website is updated. This ensures the menu is always fresh, with no stale or missing items.

Accessibility for All:

A sitemap page that humans can read also helps people using screen readers or specialized browsers to navigate. In real cafés, accessibility might mean having wide aisles and braille menus. Online, it’s about structured content that’s easy to parse.

Automating the Process

I was determined to make this as hands-off as possible. After all, automation is part of the fun in running a digital café:

- Build the Script: Write a script that scans the site for new or updated pages and automatically generates a fresh sitemap.

- Docker Shuffle: Since my site uses Docker containers, I needed to move that shiny new sitemap from the Svelte container (where it’s generated) to the Nginx container (where it’s served).

- Continuous Integration: Every time I deploy a new build, the script runs, the sitemap gets updated, and all I have to do is watch the magic happen.
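The “Build the Script” step could look roughly like the sketch below. It is not my exact script: it assumes the build produces static .html files in a single output directory, and the names build_sitemap and page_url and the example.com base URL are made up for the example. The demo at the bottom builds a throwaway site in a temporary directory so it runs anywhere.

```python
import tempfile
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from pathlib import Path

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def page_url(base_url: str, build_dir: Path, html_file: Path) -> str:
    """Map build/about/index.html to https://example.com/about/."""
    rel = html_file.relative_to(build_dir).as_posix()
    if rel == "index.html":
        rel = ""
    elif rel.endswith("/index.html"):
        rel = rel[: -len("index.html")]
    return f"{base_url.rstrip('/')}/{rel}"


def build_sitemap(build_dir: Path, base_url: str) -> str:
    """Return sitemap XML listing every .html file under build_dir."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for html_file in sorted(build_dir.rglob("*.html")):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page_url(base_url, build_dir, html_file)
        # Use the file's modification time as a rough "last changed" date.
        mtime = datetime.fromtimestamp(html_file.stat().st_mtime, tz=timezone.utc)
        ET.SubElement(url, "lastmod").text = mtime.strftime("%Y-%m-%d")
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Demo with a throwaway "build" directory (hypothetical pages).
    with tempfile.TemporaryDirectory() as tmp:
        build = Path(tmp)
        (build / "index.html").write_text("<h1>Home</h1>")
        (build / "blog").mkdir()
        (build / "blog" / "index.html").write_text("<h1>Blog</h1>")
        print(build_sitemap(build, "https://example.com"))
```

Because the sitemap is rebuilt from whatever the build actually produced, a deleted page drops off the menu on the next deploy without anyone remembering to remove it.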

Dockers: A Shipping Solution

Speaking of Docker: it’s like having a kitchen staff that delivers each part of the meal in neatly packed containers. My Svelte app is in one container, and my Nginx server is in another. While it might sound complicated, Docker keeps things modular and clean.

- Svelte Container: The worker bees baking the content.

- Nginx Container: The front-of-house staff serving it up.

When the sitemap is ready, I package it up and ship it from the Svelte container to the Nginx container. That’s how the final “menu” is always up to date for visitors to see.
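There is more than one way to do the hand-off between containers. The sketch below shows one option, assuming both containers mount the same shared volume; the function name publish_file and the directory layout are invented for the example. It copies the file to a temporary name first and then swaps it into place, so Nginx never serves a half-written menu.

```python
import os
import shutil
import tempfile
from pathlib import Path


def publish_file(source: Path, served_dir: Path) -> Path:
    """Copy source into served_dir, swapping it into place atomically."""
    served_dir.mkdir(parents=True, exist_ok=True)
    target = served_dir / source.name
    # Write to a temp file in the same directory so os.replace stays atomic.
    fd, tmp_name = tempfile.mkstemp(dir=served_dir, suffix=".tmp")
    os.close(fd)
    try:
        shutil.copyfile(source, tmp_name)
        # mkstemp creates files readable only by the owner; open that up
        # so the web server's user can read the published file.
        os.chmod(tmp_name, 0o644)
        os.replace(tmp_name, target)
    finally:
        # Clean up the temp file if anything failed before the swap.
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
    return target


if __name__ == "__main__":
    # Demo with throwaway directories standing in for the two containers.
    with tempfile.TemporaryDirectory() as tmp:
        sitemap = Path(tmp) / "svelte-build" / "sitemap.xml"
        sitemap.parent.mkdir()
        sitemap.write_text("<urlset/>")
        published = publish_file(sitemap, Path(tmp) / "nginx-html")
        print(published.name, published.read_text())
```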

Security Bouncer: Banning Bad Actors

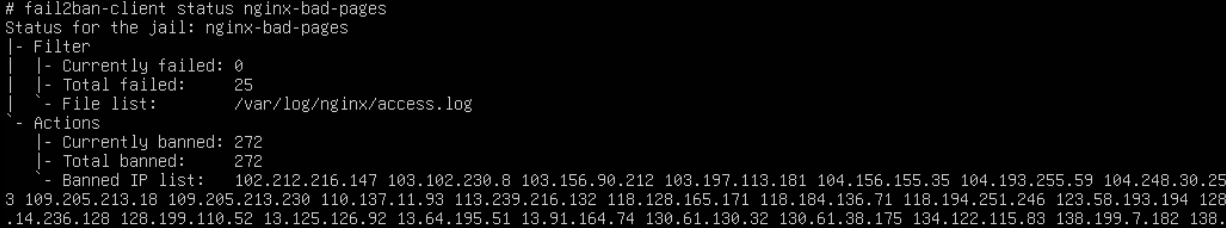

No café is complete without a vigilant bouncer to ensure safety for everyone. In my case, I wrote a script (let’s call it nginx-bad-pages) that’s always on the lookout for suspicious visitors. These might be folks (or bots) poking around for:

- .env files (sensitive environment variables)

- admin/ routes

- wp-login or other WordPress-related routes

- Any other obviously off-limits endpoints

When my café’s bouncer script catches repeated attempts to access these “bad endpoints,” it steps in and bans those visitors. That way, legitimate customers (and search engines) can enjoy the café without worrying about shady characters trying to slip into the back room.
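The core of a bouncer like this can be sketched in a few lines. This is an illustration rather than the actual nginx-bad-pages script: it assumes Nginx’s default “combined” access log format, and the pattern list, the threshold of three strikes, and the function name find_offenders are all my own picks for the example. The IP addresses come from ranges reserved for documentation.

```python
import re
from collections import Counter

# Matches the start of an Nginx "combined" log line: IP, timestamp, request.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>[A-Z]+) (?P<path>\S+)[^"]*"'
)

# Hypothetical list of endpoints no legitimate guest should be asking for.
BAD_PATH = re.compile(r"(^|/)\.env|^/admin(/|$)|wp-login|^/wp-", re.IGNORECASE)


def find_offenders(log_lines, threshold=3):
    """Return the set of IPs with at least `threshold` bad-endpoint hits."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue
        path = match.group("path").split("?", 1)[0]  # ignore query strings
        if BAD_PATH.search(path):
            hits[match.group("ip")] += 1
    return {ip for ip, count in hits.items() if count >= threshold}


SAMPLE_LOG = [
    '203.0.113.7 - - [10/Jan/2025:10:00:00 +0000] "GET /.env HTTP/1.1" 404 153 "-" "curl/8.0"',
    '203.0.113.7 - - [10/Jan/2025:10:00:01 +0000] "GET /wp-login.php HTTP/1.1" 404 153 "-" "curl/8.0"',
    '203.0.113.7 - - [10/Jan/2025:10:00:02 +0000] "POST /admin/ HTTP/1.1" 404 153 "-" "curl/8.0"',
    '198.51.100.4 - - [10/Jan/2025:10:00:03 +0000] "GET /blog/seo HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '198.51.100.4 - - [10/Jan/2025:10:00:04 +0000] "GET /admin HTTP/1.1" 404 153 "-" "Mozilla/5.0"',
]

if __name__ == "__main__":
    for ip in sorted(find_offenders(SAMPLE_LOG)):
        # One possible output: lines for an Nginx include file of deny rules.
        print(f"deny {ip};")
```

Requiring repeated attempts, rather than banning on the first miss, leaves room for a curious guest who mistypes a URL once.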

Accessibility: A Café for Everyone

A well-structured website is like a café with wide, well-lit aisles, clearly marked restrooms, and a friendly staff. Visitors can come and go easily, and nobody feels left out. In web terms, that means:

- Clear HTML Semantics: Use headings (<h1>, <h2>, etc.), alt text for images, and ARIA labels for screen readers.

- Readable Design: Enough contrast, legible font sizes, and straightforward navigation.

- Keyboard Accessibility: Ensure users can navigate entirely with the keyboard if needed.

By integrating these practices, my café (website) welcomes everyone, from robots to humans alike.
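A couple of the semantic checks are easy to automate. The sketch below is a hypothetical helper (the class name A11yChecker and the two rules are my own choices, not a full audit) that uses Python’s built-in HTML parser to flag images without an alt attribute and headings that skip a level.

```python
from html.parser import HTMLParser


class A11yChecker(HTMLParser):
    """Collects two simple accessibility issues while parsing HTML."""

    def __init__(self):
        super().__init__()
        self.issues = []
        self.last_heading = 0  # level of the most recent heading seen

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        # An empty alt="" is valid for decorative images, so only a
        # completely missing attribute is flagged.
        if tag == "img" and "alt" not in attr_map:
            self.issues.append(
                f"<img src={attr_map.get('src')!r}> has no alt attribute"
            )
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            level = int(tag[1])
            if self.last_heading and level > self.last_heading + 1:
                self.issues.append(
                    f"heading jumps from h{self.last_heading} to h{level}"
                )
            self.last_heading = level


def check_html(html: str) -> list:
    """Return a list of human-readable issues found in the HTML string."""
    checker = A11yChecker()
    checker.feed(html)
    checker.close()
    return checker.issues


if __name__ == "__main__":
    page = (
        "<h1>Menu</h1><h3>Drinks</h3>"
        '<img src="latte.png"><img src="logo.png" alt="Café logo">'
    )
    for issue in check_html(page):
        print(issue)
```

Contrast and keyboard navigation are harder to verify from markup alone, so a check like this complements manual testing rather than replacing it.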

The Espresso Shot of Knowledge

To recap the big takeaways:

- Robots.txt: Your café’s “house rules” for bots.

- Sitemap: The “menu” that guides customers (both robots and humans) to every nook and cranny of your café.

- Automation: Scripts and Docker working behind the scenes to keep everything updated without you constantly fussing in the kitchen.

- Security Bouncer: A script that bans shady visitors poking at sensitive or off-limits endpoints.

- Accessibility: Ensuring your café is open and navigable for all, from curb to counter (and from homepage to contact form).

With these steps, my digital café is more inviting than ever—both for search engine crawlers and for real human visitors. The robots know exactly where they can roam, customers can easily find what they need, and I don’t have to chase them around with fresh menus in hand. That’s the power of SEO, automation, security, and a little café charm.

So here’s to raising a cup of coffee (or tea) to all the new visitors that will trickle in, whether they’re curious humans or tireless search engine crawlers. Cheers to smooth operations, satisfied guests, and a website that’s built to last!